Arize Phoenix – Open-Source AI Observability & Debugging Platform

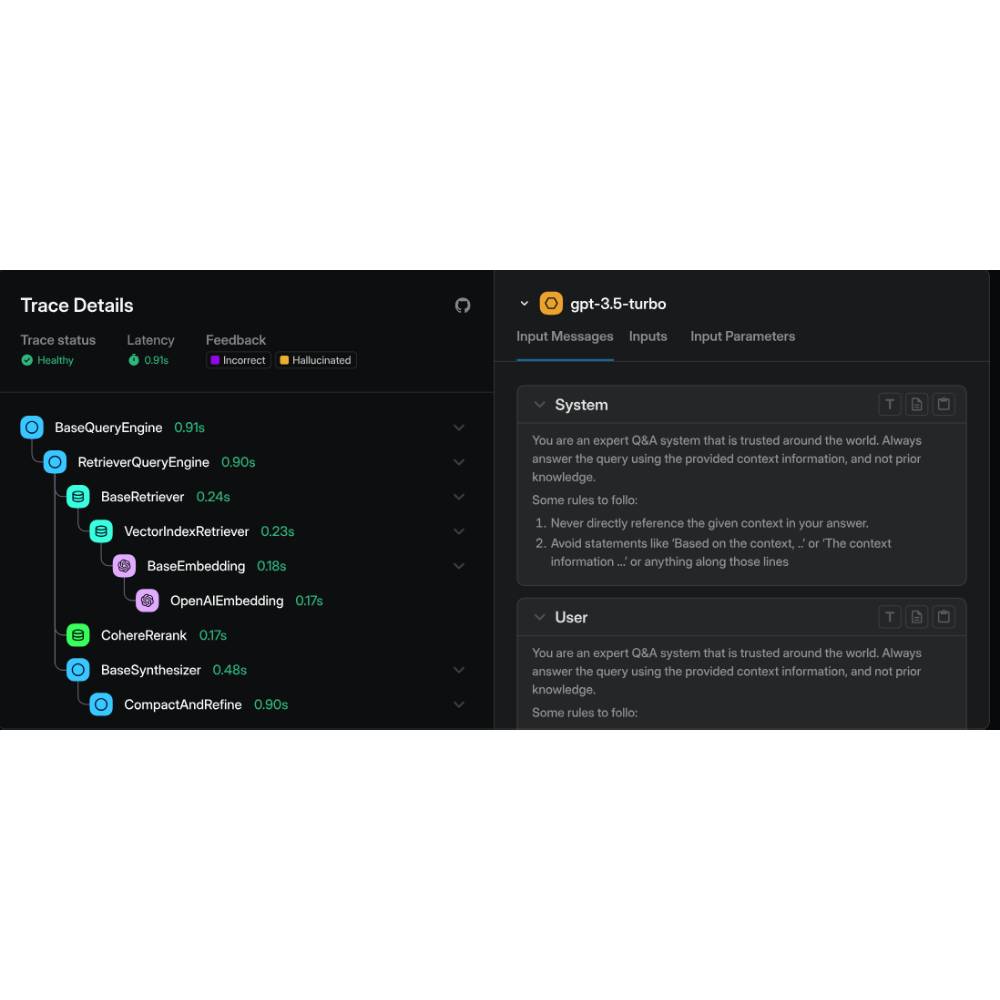

Arize Phoenix is an open-source observability platform for monitoring, tracing, and debugging large language models (LLMs) and AI applications. It gives developers, researchers, and enterprises visibility into how models behave in production, so that problems with performance, reliability, or compliance can be caught early. With Phoenix, teams can trace each interaction, identify where a request failed, and evaluate outputs for quality and fairness. Instead of treating the model as a black box, Phoenix provides transparency and actionable insights that teams can use to improve their AI workflows. Developers can debug LLM responses, enterprises can check models against compliance standards, researchers can benchmark models against defined metrics, and startups can monitor AI apps as they scale, helping them avoid costly errors. Because Phoenix is open source, it can be customized and benefits from community-driven improvements. By combining monitoring, tracing, and evaluation in one tool, Arize Phoenix makes AI observability accessible and helps organizations build trustworthy, high-performing AI systems.
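
To make "tracing interactions and identifying failure points" concrete, here is a minimal conceptual sketch in plain Python. It is not Phoenix's API: the `Span` and `Trace` classes and the `run_pipeline` function are hypothetical names invented for illustration, and the sketch only shows the kind of per-step record (name, status, duration) that a tracing tool might collect.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    """One recorded step of a request (hypothetical, not a Phoenix type)."""
    name: str
    status: str = "ok"
    error: str = ""
    duration_ms: float = 0.0


@dataclass
class Trace:
    """Collects the spans produced while handling one request."""
    spans: list = field(default_factory=list)

    @contextmanager
    def span(self, name):
        record = Span(name=name)
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            # Mark the failing step, then let the error propagate.
            record.status = "error"
            record.error = repr(exc)
            raise
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(record)

    def failures(self):
        """Names of the steps that raised an exception."""
        return [s.name for s in self.spans if s.status == "error"]


def run_pipeline(trace, question):
    """A stand-in two-step LLM app: retrieve context, then generate."""
    with trace.span("retrieve"):
        context = "Phoenix is open source."
    with trace.span("generate"):
        if not question.strip():
            raise ValueError("empty prompt")
        return f"Answer based on: {context}"
```

Calling `run_pipeline(Trace(), "What is Phoenix?")` records two successful spans, while an empty question leaves a trace whose `failures()` points at the `generate` step. A real observability platform would add much more on top of this, such as persistent storage, a UI, and output evaluations.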